
Beyond Commodity: Why Healthcare Data Is Now a Strategic Asset

Healthcare data is a necessity in drug commercialization, and those looking to leverage it need to weigh certain attributes when vetting a data aggregator.

In part one of this two-part series, we will discuss the key attributes you should look for when evaluating your partner’s data strategy and network. Part two will focus on the specific criteria you need to consider and the critical questions you need answered to achieve commercial excellence.

The U.S. healthcare system is diverse, complicated, dynamic, and often unpredictable. Effectively navigating this labyrinth is key for brands as they aim to deliver the right message to the right healthcare professional (HCP) at the right time.

Healthcare data first emerged as an accessible, low-cost, and reliable tool for commercial programs. Data accessibility has changed rapidly since then, but one thing is clear: today, data is a necessity in drug commercialization.

But the data market is very different from what it was 20, 10, or even 5 years ago. Data is more expensive, sources are less available, and the market is less stable and predictable. Data is no longer a commodity: it is a strategic asset that demands focus and recurring investment. Those seeking to leverage this data need to be thoughtful about their data strategy and should consider the following attributes when vetting a data aggregator:

- Privacy-safe

- High-quality

- Reliable

- Aligned with industry needs

Ensuring data privacy

Privacy doesn't end with the patient: it also applies to the data itself and to the covered entities that supply it. It’s important to note that for many data suppliers, data isn't their core business, which can leave it vulnerable to compromise or misuse. When that happens, it harms the entire commercial data ecosystem: covered entities become less willing to partner, which severely hampers the industry's ability to understand patients longitudinally.

Simply put, privacy should be at the center of an aggregator's work. A robust privacy and compliance program is critical to protecting the trust between a supplier and an aggregator. More importantly, it ensures the data is being used responsibly and in ways that promote sustainability and longevity.

Beyond implementing a security structure, data aggregators must act with purpose. Breaking trust or acting against agreed-upon standards of data use are unacceptable practices that damage the industry. They also raise an important question when vetting a data aggregator: does the company have a track record of keeping promises, and do you trust it to do the right thing?

Providing quality

Data is only valuable when its quality is ensured through technical guardrails. Today, conventional industry solutions de-identify patient records and then combine them. This process handles data entry errors poorly and does not accurately link records to the right patient.

Veeva SafeMine takes a different approach to patient identity by matching and de-identifying records. Compared to industry-standard tokenizers, SafeMine is 8.5 times better at reducing over-matching (merging records from different patients into one identity), leading to much more accurate datasets. It is also 5 times better at reducing under-matching (splitting one patient's records across several identities), leading to more complete datasets. What does that mean for you? More precise and complete datasets support better decision-making and better allocation of resources, both of which strengthen commercialization efforts.
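To make the two error types concrete, here is a minimal Python sketch of conventional deterministic tokenization. It assumes a token built by applying a keyed hash (HMAC-SHA256) to normalized first name, last name, and date of birth; the key, field choice, and function names are hypothetical, and this is not how SafeMine or any particular commercial tokenizer works. It shows how one data entry error splits a patient across two tokens (under-matching) and how two different patients with identical fields collapse into one token (over-matching).

```python
import hashlib
import hmac
from collections import defaultdict

# Hypothetical key for illustration; real tokenizers manage keys very differently.
TOKEN_KEY = b"example-key"


def make_token(first: str, last: str, dob: str) -> str:
    """Deterministic token from normalized identifiers (illustrative only)."""
    normalized = f"{first.strip().lower()}|{last.strip().lower()}|{dob.strip()}"
    return hmac.new(TOKEN_KEY, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def link_errors(records):
    """Count over- and under-matching against a known true patient ID.

    records: list of (true_patient_id, first, last, dob) tuples.
    Returns (over_matched_tokens, under_matched_patients).
    """
    patients_per_token = defaultdict(set)
    tokens_per_patient = defaultdict(set)
    for pid, first, last, dob in records:
        tok = make_token(first, last, dob)
        patients_per_token[tok].add(pid)
        tokens_per_patient[pid].add(tok)
    # Over-matching: one token covers more than one real patient.
    over = sum(1 for pids in patients_per_token.values() if len(pids) > 1)
    # Under-matching: one real patient is split across several tokens.
    under = sum(1 for toks in tokens_per_patient.values() if len(toks) > 1)
    return over, under


records = [
    ("P1", "Maria", "Lopez", "1980-04-02"),
    ("P1", "Mria", "Lopez", "1980-04-02"),  # typo splits P1 across two tokens
    ("P2", "John", "Smith", "1975-01-01"),
    ("P3", "John", "Smith", "1975-01-01"),  # different person, identical fields
]

print(link_errors(records))  # (1, 1)
```

The two errors pull against each other: loosening the match rule (for example, tolerating first-name typos) would reunite P1's records but could also merge more unrelated patients, which is why over-matching and under-matching are measured separately.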

Building a reliable network

In 2024, a cyberattack on a large billing services provider and a market pullback tied to privacy and compliance concerns affected billions of transactions. The impact was universal: no data provider emerged unscathed. However, aggregators whose networks were built from numerous, strategically overlapping data providers were able to mitigate the disruption. Those with strong, healthy relationships with data originators were also less vulnerable to the market pullback, thanks to mutual trust and a shared appreciation for the sensitivities of the commercial data market. These two characteristics, overlapping networks and strong originator relationships, proved critical to data aggregators and the customers they serve.
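One simple way to see why overlap helps is to measure how much of the patient population remains visible when a single supplier drops out. The Python sketch below assumes each source is represented by the set of de-identified patient IDs it supplies; the source names, IDs, and function names are hypothetical, and a real assessment would also weigh transaction volume, data types, and settings of care, not just patient counts.

```python
from typing import Dict, Set


def coverage_after_outage(network: Dict[str, Set[int]], lost_source: str) -> float:
    """Share of all patients still visible after one source drops out."""
    all_patients = set().union(*network.values())
    remaining = set().union(
        *(patients for source, patients in network.items() if source != lost_source)
    )
    return len(remaining) / len(all_patients)


def worst_case_coverage(network: Dict[str, Set[int]]) -> float:
    """Lowest remaining coverage across every possible single-source outage."""
    return min(coverage_after_outage(network, source) for source in network)


# Hypothetical networks covering the same eight patients.
siloed = {"A": {1, 2, 3, 4}, "B": {5, 6, 7, 8}}
overlapping = {"A": {1, 2, 3, 4, 5, 6}, "B": {4, 5, 6, 7, 8}, "C": {1, 2, 3, 7, 8}}

print(coverage_after_outage(siloed, "A"))       # 0.5
print(coverage_after_outage(overlapping, "A"))  # 1.0
print(worst_case_coverage(overlapping))         # 1.0
```

In the siloed network, losing one source removes half of the patients; in the overlapping network, every patient is still seen through at least one other source. Real networks are messier, but the principle is the same: redundancy limits the damage any single outage can do.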

Direct relationships

While licensing from a re-licensor of data is a shortcut to quickly obtaining more volume, it eliminates the aggregator’s ability to manage and work with the individual sources. The aggregator has no control over whether a single data source stays in or is removed from its network, and it cannot ask about additional characteristics that would make the data more meaningful for its customers. Aggregators that license from re-licensors, and their customers, are therefore at the mercy of the re-licensor’s ability to retain suppliers, which creates a shaky foundation for their data network.

This is part of what makes Veeva’s data network different. One hundred percent of the data in our network is sourced directly from originators, and a large proportion of our network overlaps, allowing us to stay close to the data and limit the risk of downstream impacts from market events. Licensing directly from an array of data providers is more complex, time-consuming, and costly than going to a single source, but it yields greater speed, quality, and reliability in the data customers use.

The role of trust

Health data is a precious resource, and we have seen it misused in the market. Relicensing data to suppliers’ competitors, revealing the names of suppliers, and skimping on privacy have sown distrust within the industry. That distrust is bad for everyone, especially for:

- Data suppliers who trust aggregators to do the right thing

- Aggregators who face a more difficult data licensing market

- Customers who depend on aggregators for their data

- Patients whose data is being mishandled

Privacy protections that go above and beyond, together with an unwavering commitment to agreed-upon use cases, are foundational to trust and critical to building a reliable network. Veeva has built a data network that draws on a diverse set of medical, retail, and specialty pharmacy data sources and has experienced minimal supplier turnover throughout the network's existence.

Staying ahead of industry trends

In the early 2000s, basic questions could be answered with simple data. With only a few aggregators and distributors, data was slow, retail-focused, and inflexible. This straightforward and basic approach to understanding patient activities worked well for a period, but as medicines and pathways to treatment grew more complex, the data did not keep pace.

New media channels and evolving patient behaviors have led marketers to strive for omnichannel marketing excellence. The goal is to provide a consistent experience for patients, however they interact with a brand. In this new world, more decision-makers and influencers are involved, and increasingly stratified go-to-market models have created demand for data that accounts for these nuances. At the same time, the broad availability of data and the adoption of universal patient tokens have allowed numerous new data aggregators and analytics firms to emerge.

Today, biopharma organizations rely heavily on data aggregators for packaged, analytics-ready datasets. Rather than depending on large internal data science functions, biopharma companies look to market leaders such as Veeva to reduce their resource needs and ease compliance and privacy headaches. Compass Data Connector ingests data from data partners on behalf of customers and applies the full power of the Veeva Compass pipeline to each data type. This approach reduces risk and lowers the burden on biopharma of managing the HIPAA determination process.

Now that data is more layered, choices are numerous, and the industry has evolved multiple times in just the last decade, how do we define and identify a quality data source? How do we balance the needs of our brands today against the implications for how our organizations invest R&D dollars? Simply put, what does ‘good’ look like?

Case in Point: Specialty Pharmacy

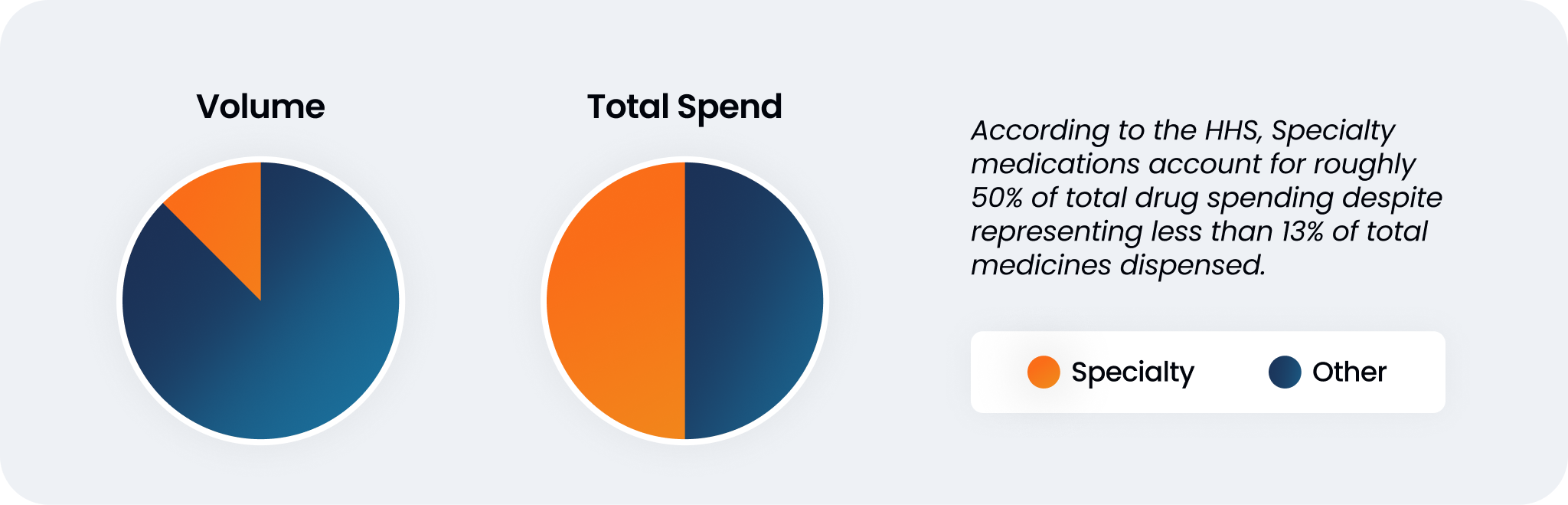

While broad coverage can make a dataset helpful, there are instances in which other factors should be taken into account. Consider specialty medications: high-cost medications that treat rare, complex, and/or chronic health conditions. Although specialty medications make up a relatively small portion of total medicines dispensed, they account for about half of total drug spending in the U.S.

This shows why a dataset should be vetted not only on its coverage but also on its contents. A large dataset built only from retail pharmacy data misses specialty pharmacy data entirely. A healthy dataset considers the entire biopharma landscape and accounts for nuances in the market.

The future of health data

As in decades past, the demand for and use cases of data will continue to evolve and mature. Those who are not proactive will fall behind. Here are some of our predictions for the future of health data:

Data keeps growing, but it also grows scarcer

As technology becomes more advanced and accessible, more data and data types will emerge. These new sources will prove to be great assets for savvy biopharma stakeholders who rely on data to make more informed commercialization decisions. At the same time, however, data is becoming harder to source: data originators will become more selective about whom they license their data to.

Growing specialty drug utilization will further shift focus from retail

In the 1990s, fewer than 30 specialty drugs were available. Today, specialty drugs account for roughly 75% of novel drugs approved since 2020, and they represent about 75% of the approximately 7,000 new drugs under development.

Achieving a longitudinal view becomes more complex

Recent market events have shaken the industry, and data originators are now more sensitive about where they license their data. Trusted partners with a proven track record and high ethical standards will have an advantage over others. Achieving longitudinality remains essential as patient populations for high-cost medicines shrink, and understanding the patient across multiple settings is paramount.

Shrinking competition and stifled innovation

Increased sensitivity among data originators will inevitably tighten the data supply and, in turn, reduce competition in the data aggregation space. The aggregators that remain, and the customers they serve, will set the pace of innovation.

Closing

Data is an incredible tool with seemingly endless use cases for the biopharma industry. Finding the right audience, understanding healthcare trends, and mapping patient activities are now critical as the industry moves deeper into rare and niche diseases with more complex medicines. But with so many options available, it’s important to recognize and advocate for best practices in choosing a data supplier. Size and scope are assets, but you also need to find out:

- Is the dataset tailored to meet the needs of today’s market?

- Is the data aggregator fully committed to the high capital expenditure required to make their data product(s) last for the long term?

- Is the dataset strategically built from diverse sources, or were shortcuts taken in building the asset?

- What measures are being taken to ensure the quality and security of the underlying data?

If these questions go unasked, quality commercial data will become scarcer and harder to obtain. The industry will then be less able to understand patients, hindering drug manufacturers from getting the right message to the right HCP at the right time.
